Fiber Certification Testing: Loss, Length (and Sometimes Reflectance) Is All You Need

January 19, 2021 / General, Standard and Certification, Best Practices

As data speeds increase and new fiber applications emerge, there has been some confusion surrounding fiber testing parameters and whether insertion loss testing is enough to guarantee support for high-speed applications.

Apart from new short-reach singlemode applications, which are more susceptible to reflections and whose standards therefore take connector reflectance into account, insertion loss, length and polarity are really all you need to test for Tier 1 certification.

It’s What the Standards Look At

Measured in decibels (dB), insertion loss is the reduction in signal power that occurs along any length of cable, for any type of transmission. The longer the cable, the more the signal is reduced (or attenuated) by the time it reaches the far end. In addition to length, events along the way, including connectors, splices, splitters and bends, also contribute to overall loss.
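To make the decibel arithmetic concrete, here is a minimal sketch of how loss is expressed in dB and how it adds up along a link. The function names are hypothetical, and the 3.0 dB/km fiber attenuation, 0.75 dB per connector pair and 0.3 dB per splice figures are assumed example values, not numbers taken from this article.

```python
import math


def insertion_loss_db(power_in_mw: float, power_out_mw: float) -> float:
    """Insertion loss in dB from launched and received optical power (both in mW)."""
    return 10 * math.log10(power_in_mw / power_out_mw)


def estimated_link_loss_db(
    length_m: float,
    fiber_db_per_km: float,
    connector_pairs: int,
    loss_per_connector_pair_db: float,
    splices: int,
    loss_per_splice_db: float,
) -> float:
    """Fiber attenuation grows with length; each connector pair and splice adds its own loss."""
    fiber_loss = fiber_db_per_km * length_m / 1000
    return fiber_loss + connector_pairs * loss_per_connector_pair_db + splices * loss_per_splice_db


# Losing half the launched power is roughly 3 dB of insertion loss.
print(round(insertion_loss_db(1.0, 0.5), 2))  # 3.01

# Hypothetical 300 m multimode link using the ASSUMED example values:
# 3.0 dB/km fiber attenuation, 2 connector pairs at 0.75 dB, no splices.
print(round(estimated_link_loss_db(300, 3.0, 2, 0.75, 0, 0.3), 2))  # 2.4
```

Because dB values are logarithmic, the individual losses simply add, which is why a loss budget can be built by summing the contributions of length and each event.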

The reason we care so much about insertion loss for fiber links is that, to adequately support an application, the signal must arrive with enough power for the receiver to interpret it. In fact, all IEEE fiber applications specify overall channel and connector loss limits. Insertion loss is the single most important parameter determining the performance of practically every fiber application, and it is the critical parameter measured when conducting Tier 1 certification testing with your CertiFiber® Pro Optical Loss Test Set.
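The "enough power for the receiver" idea can be sketched as a simple power margin: received power is launch power minus insertion loss, and it must stay above the receiver's sensitivity. The launch power and sensitivity values below are hypothetical, and this simplified model ignores the dispersion and other penalties that real IEEE power budgets include.

```python
def power_margin_db(launch_dbm: float, insertion_loss_db: float, rx_sensitivity_dbm: float) -> float:
    """Margin between received power and receiver sensitivity (positive means the signal is detectable)."""
    received_dbm = launch_dbm - insertion_loss_db  # dBm minus dB gives dBm
    return received_dbm - rx_sensitivity_dbm


# HYPOTHETICAL transceiver: -2.0 dBm launch power, -9.0 dBm receiver sensitivity,
# over a link with 2.4 dB of insertion loss.
margin = power_margin_db(-2.0, 2.4, -9.0)
print(round(margin, 1))  # 4.6
print(margin > 0)        # True: the receiver should be able to interpret the signal
```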

It’s important to note that the maximum allowable insertion loss varies by application, and higher-speed applications have more stringent insertion loss requirements. Because insertion loss is directly related to length, higher-speed applications also have shorter distance limits; the IEEE essentially balances loss and distance requirements to accommodate the majority of installations. For example, 10 Gb/s multimode (10GBASE-SR) applications have a maximum channel insertion loss of 2.9 dB over 400 meters of OM4 multimode fiber, while 40 Gb/s multimode (40GBASE-SR4) applications have a maximum channel insertion loss of 1.5 dB over just 150 meters of OM4.
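The two OM4 examples above can be turned into a simple pass/fail check on both loss and length. This is a sketch: the `Limit` type, the `LIMITS` table and `supports()` are hypothetical names, and the table holds only the two values quoted in this article, not a complete set of IEEE limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    max_channel_loss_db: float
    max_length_m: int


# Only the two OM4 examples quoted in the text; NOT a complete table of IEEE limits.
LIMITS = {
    ("10GBASE-SR", "OM4"): Limit(max_channel_loss_db=2.9, max_length_m=400),
    ("40GBASE-SR4", "OM4"): Limit(max_channel_loss_db=1.5, max_length_m=150),
}


def supports(application: str, fiber: str, measured_loss_db: float, length_m: float) -> bool:
    """True if the measured channel is within both the loss and the length limit."""
    limit = LIMITS[(application, fiber)]
    return measured_loss_db <= limit.max_channel_loss_db and length_m <= limit.max_length_m


print(supports("10GBASE-SR", "OM4", 2.1, 250))   # True
print(supports("40GBASE-SR4", "OM4", 2.1, 120))  # False: 2.1 dB exceeds the 1.5 dB limit
```

The same 2.1 dB channel passes for 10GBASE-SR but fails for 40GBASE-SR4, which is exactly the tightening of limits at higher speeds described above.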

The Role of Length

If the insertion loss is low enough, the signal can be detected at the far end of the link. So why does length matter? For two reasons. First, the communications protocol relies on signals being received at the far end within a specified time, and longer lengths mean greater delays. Second, dispersion of the waveform as it travels through the fiber can distort it to the point that the receiver can’t tell a one from a zero. This is tied to the fiber’s modal bandwidth (more on that below). Standards designers therefore limit link length based on the dispersion characteristics of each fiber type. That’s why 100GBASE-SR4 is limited to 70 meters on OM3 and 100 meters on OM4 or OM5.
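The delay part of the argument is simple arithmetic: light in glass travels at roughly the speed of light divided by the fiber's group index. The sketch below assumes a typical group index of 1.48 (an assumption, not a value from this article) and uses the 100GBASE-SR4 length limits quoted above; the function and table names are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458

# 100GBASE-SR4 length limits quoted in the text.
SR4_100G_MAX_LENGTH_M = {"OM3": 70, "OM4": 100, "OM5": 100}


def propagation_delay_ns(length_m: float, group_index: float = 1.48) -> float:
    """One-way delay in ns; group_index=1.48 is an ASSUMED typical value for silica fiber."""
    return length_m * group_index / SPEED_OF_LIGHT_M_PER_S * 1e9


def within_sr4_length_limit(fiber: str, length_m: float) -> bool:
    """True if the link length is within the 100GBASE-SR4 limit for this fiber type."""
    return length_m <= SR4_100G_MAX_LENGTH_M[fiber]


print(round(propagation_delay_ns(100)))     # about 494 ns for 100 m
print(within_sr4_length_limit("OM3", 80))   # False: OM3 is limited to 70 m
print(within_sr4_length_limit("OM4", 80))   # True
```

Every extra meter adds roughly 5 ns of delay, while the per-fiber length limit captures the dispersion side of the argument.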

Except When They Also Consider Reflectance

Fiber connectors must meet certain reflectance performance to comply with industry component standards, but reflectance is not something you typically need to test, except for new short-reach singlemode applications. Multimode transceivers are extremely tolerant of reflections; singlemode transceivers are not. And the low-cost, low-power singlemode transceivers used in short-reach applications like 100GBASE-DR, 200GBASE-DR4 and 400GBASE-DR4 are even more susceptible to reflections.

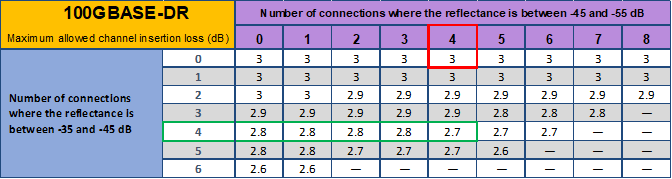

As a result, for these new short-reach applications the IEEE specifies insertion loss limits based on the number and reflectance of connections in the channel. As shown in the table, in a 100GBASE-DR4 application with four connectors that each have a reflectance between -45 and -55 dB, the maximum channel insertion loss is 3.0 dB (highlighted in red). But with four connectors that have a reflectance between -35 and -45 dB, the maximum insertion loss drops to 2.7 dB (highlighted in green).
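The reflectance-dependent limit can be sketched as a lookup. This hypothetical function covers only the two four-connector rows described in the paragraph above, not the full IEEE table. It classifies the channel by its most reflective connection, which is a conservative assumption for channels that mix connectors from both bands, and it treats exactly -45 dB as belonging to the 3.0 dB row, which is also an assumption.

```python
from typing import Sequence


def max_channel_loss_db(reflectances_db: Sequence[float]) -> float:
    """Hypothetical lookup covering ONLY the two four-connector rows described in the text.

    Reflectance is negative: a less negative value (e.g. -38 dB) is MORE reflective,
    so the worst connection is the one with the largest value.
    """
    if len(reflectances_db) != 4:
        raise ValueError("only the four-connection case is covered")
    worst = max(reflectances_db)
    if -55 <= worst <= -45:
        return 3.0  # worst connection between -45 and -55 dB
    if -45 < worst <= -35:
        return 2.7  # worst connection between -35 and -45 dB
    raise ValueError("reflectance outside the two rows quoted in the text")


print(max_channel_loss_db([-50, -48, -52, -47]))  # 3.0
print(max_channel_loss_db([-50, -40, -52, -47]))  # 2.7
```

One reflective connector is enough to lower the allowable loss for the whole channel, which is why dirty or poor-quality connections matter so much in these applications.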

While you can use manufacturer reflectance specifications as a general guideline when planning your loss budgets, keep in mind that reflectance can change over time due to dirt and debris, so it makes sense to build in some margin. When it comes to testing, you’ll need to measure the reflectance of specific connections with your OptiFiber® Pro OTDR as part of Tier 2 testing, which is recommended for short-reach singlemode applications and as part of a complete testing strategy.

What About Bandwidth Testing?

The bandwidth of multimode fiber is specified as modal bandwidth, or effective modal bandwidth (EMB): a bandwidth-distance product, expressed in MHz·km, that describes how much data a given fiber can carry over distance at a given wavelength. For example, OM3 fiber is specified at 2000 MHz·km and OM4 at 4700 MHz·km EMB at 850 nm. Fiber applications are specified for use with fiber of a minimum bandwidth. Bandwidth testing is done by fiber manufacturers using a complex laboratory test, in which specialized analyzers launch short laser pulses into the fiber and measure them at the far end. It’s costly to test accurately in the field, and you don’t need to worry about it if you stick to the length limits defined by the standards.

That’s not to say you won’t test the throughput of a channel once the fiber network is certified and running, but that is a test of the actual connection speed, not of the bandwidth of the cable itself. If your insertion loss is still within limits and your network isn’t performing, it’s worth breaking out the OTDR to check the reflectance of events on the link. If both loss and reflectance are OK, the problem most likely lies with the active equipment, not the cabling. If you do have a loss problem, then it’s time to troubleshoot.
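The troubleshooting order in the paragraph above amounts to a short decision procedure. This is an illustrative sketch; the `triage()` name and its messages are hypothetical.

```python
def triage(loss_within_limits: bool, reflectance_ok: bool) -> str:
    """Rough decision order for a certified link whose network isn't performing."""
    if not loss_within_limits:
        return "loss problem: troubleshoot the cabling"
    if not reflectance_ok:
        return "reflectance problem: check the events flagged by the OTDR"
    return "cabling looks fine: check the active equipment"


print(triage(loss_within_limits=True, reflectance_ok=True))
# cabling looks fine: check the active equipment
```

Loss is checked first because it is the Tier 1 result you already have; reflectance is the Tier 2 follow-up.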

In short, insertion loss plus length (Tier 1 testing) is nearly always what will determine application support, but there may be occasions when you need to add reflectance (Tier 2 testing). That’s why the standards define only those two tiers of field testing – there really isn’t a need for more.